TensorFlow 2.0 Study Notes

2020-06-01

2 Constants, variables, matrix multiplication, gradient descent

Reference: Hull (2019)

2.1 Use TensorFlow like NumPy

(2, 3) <dtype: 'float32'>

tf.Tensor([2. 5.], shape=(2,), dtype=float32)

tf.Tensor([2. 5.], shape=(2,), dtype=float32)

tf.Tensor(
[[11. 12. 13.]
 [14. 15. 16.]], shape=(2, 3), dtype=float32)

tf.Tensor(
[[ 1.  4.  9.]
 [16. 25. 36.]], shape=(2, 3), dtype=float32)
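The notes show only the outputs here, not the input. They are consistent with a 2x3 tensor holding the values 1 to 6, which is an assumption. The following plain-Python sketch (no TensorFlow required) reproduces the same arithmetic with nested lists: a column slice, a scalar addition, and an element-wise square.

```python
# Assumed input: the notes show only outputs, so this 2x3 matrix is a guess
# that happens to reproduce them.
t = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]

shape = (len(t), len(t[0]))                       # (rows, columns)
second_column = [row[1] for row in t]             # like t[:, 1] in NumPy
plus_ten = [[v + 10 for v in row] for row in t]   # the scalar is applied to every element
squared = [[v * v for v in row] for row in t]     # element-wise square

print(shape)
print(second_column)
print(plus_ten)
print(squared)
```

In TensorFlow the same steps are one-liners on a tensor, which is the sense in which it can be used like NumPy.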

2.2 Constants

2.0.0-alpha0

from tensorflow import constant, fill, ones_like

# Define a 3x4 tensor with all values equal to 9
x = fill([3, 4], 9)

# Define a tensor of ones with the same shape as x
y = ones_like(x)

print(x.numpy())
print(y.numpy())

[[9 9 9 9]
 [9 9 9 9]
 [9 9 9 9]]
[[1 1 1 1]
 [1 1 1 1]
 [1 1 1 1]]

# Define the one-dimensional vector, z
z = constant([1, 2, 3, 4])

# Print z as a numpy array
print(z.numpy())

[1 2 3 4]

2.3 Variables

array([1, 2, 3, 4], dtype=int32)

One property to note: constants cannot be changed, but variables can.

2.4 Element-wise multiplication

Element-wise multiplication in TensorFlow is performed using two tensors with identical shapes. This is because the operation multiplies elements in corresponding positions in the two tensors.

Element-wise operations require matching dimensions, because elements are paired by position.

\[\left[ \begin{array}{ll}{1} & {2} \\ {2} & {1}\end{array}\right] \odot \left[ \begin{array}{ll}{3} & {1} \\ {2} & {5}\end{array}\right]=\left[ \begin{array}{ll}{3} & {2} \\ {4} & {5}\end{array}\right]\]
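The equation above can be checked by hand. The sketch below is a plain-Python illustration with a hypothetical helper named `hadamard`; it is not TensorFlow code. It is also stricter than TensorFlow, since it insists on identical shapes, whereas `tf.multiply` also accepts shapes that can be broadcast.

```python
def hadamard(a, b):
    """Element-wise product of two equally shaped 2-D lists."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("shapes must match for element-wise multiplication")
    # Pair up rows, then pair up the entries inside each row.
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

A = [[1, 2], [2, 1]]
B = [[3, 1], [2, 5]]
print(hadamard(A, B))  # [[3, 2], [4, 5]]
```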

from tensorflow import constant, multiply, ones_like

# Define tensors A0 and B0 as constants
A0 = constant([1, 2, 3, 4])
B0 = constant([[1, 2, 3], [1, 6, 4]])

# Define A1 and B1 to have the correct shape
A1 = ones_like(A0)
B1 = ones_like(B0)

# Perform element-wise multiplication
A2 = multiply(A0, A1)
B2 = multiply(B0, B1)

# Print the tensors A2 and B2
print(A2.numpy())
print(B2.numpy())

[1 2 3 4]
[[1 2 3]
 [1 6 4]]

2.5 Matrix multiplication

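from tensorflow import constant, matmul

X = constant([[1, 2], [2, 1], [5, 8], [6, 10]])
b = constant([[1], [2]])
y = constant([[6], [4], [20], [23]])

# Reconstructed step (not shown in the notes): the output below equals y - matmul(X, b)
ypred = matmul(X, b)
error = y - ypred
print(error.numpy())

[[ 1]
 [ 0]
 [-1]
 [-3]]

Understanding matrix multiplication will make things simpler when we start making predictions with linear models.

At its core, OLS regression is matrix multiplication.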
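The printed column is consistent with the prediction error y - Xb of a linear model. As a minimal plain-Python sketch (the helper `matmul` below is a hand-written illustration, not the TensorFlow function), the arithmetic can be verified with nested lists:

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

X = [[1, 2], [2, 1], [5, 8], [6, 10]]
b = [[1], [2]]
y = [[6], [4], [20], [23]]

ypred = matmul(X, b)                                   # predictions of the linear model
error = [[yi[0] - pi[0]] for yi, pi in zip(y, ypred)]  # observed minus predicted
print(ypred)  # [[5], [4], [21], [26]]
print(error)  # [[1], [0], [-1], [-3]]
```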

2.6 Group by and aggregate

reduce_sum(A, i) sums over dimension i

\[W=\left[ \begin{array}{ccccc}{11} & {7} & {4} & {3} & {25} \\ {50} & {2} & {60} & {0} & {10}\end{array}\right]\]

[61 9 64 3 35]

[ 50 122]

Understanding how to sum over tensor dimensions will be helpful when preparing datasets and training models.

Aggregation operations make computation easier.
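The two printed vectors are the sums of W over dimension 0 and dimension 1. A plain-Python sketch of the same reduction, using nested lists rather than TensorFlow, makes the direction of each sum explicit:

```python
W = [[11, 7, 4, 3, 25],
     [50, 2, 60, 0, 10]]

# Summing over dimension 0 collapses the rows: one total per column.
sum_over_dim0 = [sum(col) for col in zip(*W)]
# Summing over dimension 1 collapses the columns: one total per row.
sum_over_dim1 = [sum(row) for row in W]

print(sum_over_dim0)  # [61, 9, 64, 3, 35]
print(sum_over_dim1)  # [50, 122]
```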

2.7 reshape

\[H = \left[ \begin{array}{ccc}{255} & {0} & {255} \\ {255} & {255} & {255} \\ {255} & {0} & {255}\end{array}\right]\]

A 9-pixel grayscale image of the letter H.

For example, this \(H\) needs to be flattened into one-dimensional data (here, a 9x1 column).

array([[255],
       [  0],
       [255],
       [255],
       [255],
       [255],
       [255],
       [  0],
       [255]], dtype=int32)

reshape helps prepare inputs for processing.
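The reshaping step can be illustrated without TensorFlow. The sketch below flattens the 3x3 image in row-major order and then wraps each pixel in its own row, which yields the same 9x1 layout as the output above.

```python
H = [[255, 0, 255],
     [255, 255, 255],
     [255, 0, 255]]

# Row-major flattening: read the image left to right, top to bottom.
flat = [pixel for row in H for pixel in row]
# Turn the flat list into a 9x1 column.
column = [[pixel] for pixel in flat]

print(flat)
print(len(column), len(column[0]))  # 9 1
```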

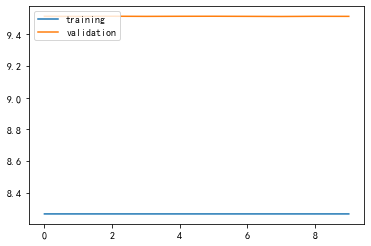

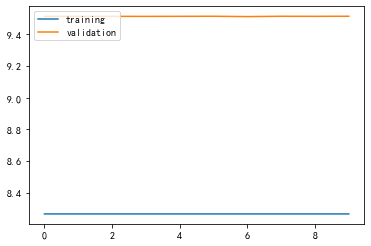

2.8 Gradient descent

Here we can compute derivatives.
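The notes do not show which function is differentiated. As a minimal sketch, assuming the hypothetical objective f(x) = x * x, the derivative can be approximated numerically in plain Python and then used for gradient descent. TensorFlow computes such gradients automatically (for example with `tf.GradientTape`); the finite-difference helper here is only an illustration.

```python
def numerical_derivative(f, x, h=1e-6):
    """Central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def f(x):
    # Hypothetical objective chosen for illustration; not taken from the notes.
    return x * x

slope_at_zero = numerical_derivative(f, 0.0)

# Plain gradient descent: repeatedly step against the slope.
x = 3.0
learning_rate = 0.1
for _ in range(100):
    x -= learning_rate * numerical_derivative(f, x)

print(slope_at_zero)
print(x)
```

Under this assumption the slope at 0 is zero, and the iterate moves toward the minimizer x = 0.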

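array([0.], dtype=float32)

TensorFlow can be installed on Windows 7, but Python crashes when import tensorflow is executed, so setting up a virtual machine running Linux is recommended; see the Linux study notes.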

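2.9 Differences between constant and variable

Typically, you do not create a tf.Variable by hand. tf.Variable is similar to tf.constant, but the latter cannot be modified while the former can.

This makes variables convenient for updating weights during backpropagation.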

<tf.Variable 'UnreadVariable' shape=(2, 3) dtype=int32, numpy=
array([[-3, -2, -1],
       [ 0,  1,  2]], dtype=int32)>

<tf.Variable 'Variable:0' shape=(2, 3) dtype=int32, numpy=
array([[-3, -2, -1],
       [ 0,  1,  2]], dtype=int32)>

3 Linear regression

Reference: Hull (2019)

3.1 Defining variable types

The syntax is SQL-like.

'2.0.0-alpha0'

3.2 Multiplication

count    2.161300e+04
mean     5.400881e+05
std      3.671272e+05
min      7.500000e+04
25%      3.219500e+05
50%      4.500000e+05
75%      6.450000e+05
max      7.700000e+06
Name: price, dtype: float64

<tf.Tensor: id=2, shape=(21613,), dtype=float32, numpy=
array([22190. , 53800. , 18000. , ..., 40210.1, 40000. , 32500. ],
      dtype=float32)>

Why does broadcasting work here once a pd.Series is defined?

If it is not a pd.Series, the scalar has to be set as a tf.Variable.

<tf.Tensor: id=48, shape=(3,), dtype=float32, numpy=array([0.1, 0.2, 0.3], dtype=float32)>

3.3 loss function

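import tensorflow as tf

# Assumes housing (a pandas DataFrame) and price were defined earlier in the notes
size = housing['sqft_living']
beta0 = 1
beta1 = 1
yhat = beta0 + beta1 * size
tf.keras.losses.mse(price, yhat).numpy()

-98150

This defines the loss function; once an optimizer is chosen, we can iterate.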
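To make the quantity being minimized concrete, here is a minimal plain-Python sketch of mean squared error for the model yhat = beta0 + beta1 * size. The helper `mse` and the three data points are invented for illustration; they are not the housing data or the Keras function.

```python
def mse(y_true, y_pred):
    """Mean of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Toy data invented for illustration; not the housing dataset from the notes.
size = [1.0, 2.0, 3.0]
price = [3.0, 5.0, 7.0]   # generated as 1 + 2 * size

beta0, beta1 = 1.0, 1.0
yhat = [beta0 + beta1 * s for s in size]
loss_initial = mse(price, yhat)

# With the true coefficients the loss drops to zero.
loss_true = mse(price, [1.0 + 2.0 * s for s in size])

print(loss_initial, loss_true)
```

The loss is a function of beta0 and beta1, so an optimizer only has to adjust those two numbers to reduce it.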

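3.4 Defining a loss function

This function is very instructive and helps build understanding.

import tensorflow as tf

def loss_function(y, yhat):
    error = y - yhat
    # Flag errors whose absolute value exceeds 1
    is_large = tf.abs(error) > 1
    squared_loss = tf.square(error) / 2
    linear_loss = tf.abs(error) - 0.5
    # Linear loss for large errors, squared loss for small ones
    return tf.where(is_large, linear_loss, squared_loss)

Here the loss function is customized: when the absolute error is large (above 1) the linear loss is used, and when it is small the squared loss is used, so the loss can be tailored freely (Géron 2019).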
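The piecewise rule above is the Huber loss with threshold 1. A scalar plain-Python sketch shows how the two branches join; the `delta` parameter is a generalization added here for illustration, and the default of 1.0 matches the function above.

```python
def huber_loss(y, yhat, delta=1.0):
    """Huber loss for a single prediction; quadratic near zero, linear in the tails."""
    error = y - yhat
    if abs(error) > delta:
        # Linear branch; for delta = 1 this is abs(error) - 0.5.
        return delta * (abs(error) - 0.5 * delta)
    # Quadratic branch for small errors.
    return 0.5 * error ** 2

print(huber_loss(0.5, 0.0))  # 0.125 (quadratic branch)
print(huber_loss(3.0, 0.0))  # 2.5   (linear branch)
print(huber_loss(1.0, 0.0))  # 0.5   (both branches agree at the threshold)
```

Because the linear branch grows more slowly than the square, single large errors pull on the fit less than they would under mean squared error.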

3.5 实现线性回归

One of the benefits of using tensorflow is that you have the option to customize models down to the linear algebraic-level, as we’ve shown in the last two exercises (Hull 2019)

TensorFlow helps in understanding neural networks at the level of linear algebra.

# define constants and variables
price = tf.cast(housing['price'], tf.float32)  # constants
size = tf.cast(housing['sqft_living'], tf.float32)  # constants
# Pass dtype by keyword: the second positional argument of tf.Variable is trainable
beta0 = tf.Variable(0.1, dtype=tf.float32)
beta1 = tf.Variable(0.1, dtype=tf.float32)

Here 0.1 is the initial value.

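# define loss function
def loss_function(beta0, beta1, price, size):
    yhat = beta0 + beta1 * size
    loss = tf.keras.losses.mse(price, yhat)
    return loss

<tf.Variable 'UnreadVariable' shape=() dtype=int64, numpy=1>

0.101 0.101

This is the result after one iteration.

How should we understand this for loop? The optimizer minimizes the loss defined by the loss function, and the loop simply prints the result of each iteration. Of course, the final beta0 and beta1 are ...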
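The idea behind such a loop can be sketched without TensorFlow. The example below uses plain gradient descent with hand-derived MSE gradients on invented toy data; the optimizer used in the notes is not shown and may well behave differently, and the data, learning rate, and step count here are assumptions chosen so that the sketch converges.

```python
def mse_gradients(beta0, beta1, xs, ys):
    """Gradients of mean squared error with respect to beta0 and beta1."""
    n = len(xs)
    residuals = [beta0 + beta1 * x - y for x, y in zip(xs, ys)]
    grad0 = 2.0 * sum(residuals) / n
    grad1 = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
    return grad0, grad1

# Toy data invented for illustration: y = 1 + 2 * x exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

beta0, beta1 = 0.1, 0.1      # same starting values as in the notes
learning_rate = 0.05         # assumed; small enough for this toy problem
for step in range(2000):
    g0, g1 = mse_gradients(beta0, beta1, xs, ys)
    # Each iteration moves the parameters a little against the gradient.
    beta0 -= learning_rate * g0
    beta1 -= learning_rate * g1

print(beta0, beta1)
```

Each pass does what one call to the optimizer does conceptually: evaluate the loss gradient at the current parameters and update them in place.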

# for loop to find the best parameters
# opt is assumed to be an optimizer created earlier (its definition is not shown in these notes)
for i in range(1000):
    opt.minimize(lambda: loss_function(beta0, beta1, price, size),
                 var_list=[beta0, beta1])
    print(loss_function(beta0, beta1, price, size))

print(beta0.numpy(), beta1.numpy())

tf.Tensor(426196570000.0, shape=(), dtype=float32)

tf.Tensor(426193780000.0, shape=(), dtype=float32)

tf.Tensor(426191160000.0, shape=(), dtype=float32)

tf.Tensor(426188370000.0, shape=(), dtype=float32)

tf.Tensor(426185620000.0, shape=(), dtype=float32)

tf.Tensor(426182930000.0, shape=(), dtype=float32)

...

tf.Tensor(424691960000.0, shape=(), dtype=float32)

tf.Tensor(424689270000.0, shape=(), dtype=float32)

tf.Tensor(424686520000.0, shape=(), dtype=float32)

tf.Tensor(424683800000.0, shape=(), dtype=float32)

tf.Tensor(424681050000.0, shape=(), dtype=float32)

tf.Tensor(424678330000.0, shape=(), dtype=float32)

tf.Tensor(424675600000.0, shape=(), dtype=float32)

tf.Tensor(424672850000.0, shape=(), dtype=float32)

tf.Tensor(424670130000.0, shape=(), dtype=float32)

tf.Tensor(424667450000.0, shape=(), dtype=float32)

tf.Tensor(424664830000.0, shape=(), dtype=float32)

tf.Tensor(424662140000.0, shape=(), dtype=float32)

tf.Tensor(424659350000.0, shape=(), dtype=float32)

tf.Tensor(424656570000.0, shape=(), dtype=float32)

tf.Tensor(424653880000.0, shape=(), dtype=float32)

tf.Tensor(424651200000.0, shape=(), dtype=float32)

tf.Tensor(424648500000.0, shape=(), dtype=float32)

tf.Tensor(424645800000.0, shape=(), dtype=float32)

tf.Tensor(424643070000.0, shape=(), dtype=float32)

tf.Tensor(424640380000.0, shape=(), dtype=float32)

tf.Tensor(424637700000.0, shape=(), dtype=float32)

tf.Tensor(424634970000.0, shape=(), dtype=float32)

tf.Tensor(424632220000.0, shape=(), dtype=float32)

tf.Tensor(424629500000.0, shape=(), dtype=float32)

tf.Tensor(424626800000.0, shape=(), dtype=float32)

tf.Tensor(424624130000.0, shape=(), dtype=float32)

tf.Tensor(424621500000.0, shape=(), dtype=float32)

tf.Tensor(424618720000.0, shape=(), dtype=float32)

tf.Tensor(424615970000.0, shape=(), dtype=float32)

tf.Tensor(424613250000.0, shape=(), dtype=float32)

tf.Tensor(424610530000.0, shape=(), dtype=float32)

tf.Tensor(424607840000.0, shape=(), dtype=float32)

tf.Tensor(424605100000.0, shape=(), dtype=float32)

tf.Tensor(424602400000.0, shape=(), dtype=float32)

tf.Tensor(424599750000.0, shape=(), dtype=float32)

tf.Tensor(424597000000.0, shape=(), dtype=float32)

tf.Tensor(424594280000.0, shape=(), dtype=float32)

tf.Tensor(424591600000.0, shape=(), dtype=float32)

tf.Tensor(424588870000.0, shape=(), dtype=float32)

tf.Tensor(424586180000.0, shape=(), dtype=float32)

tf.Tensor(424583430000.0, shape=(), dtype=float32)

tf.Tensor(424580740000.0, shape=(), dtype=float32)

tf.Tensor(424578000000.0, shape=(), dtype=float32)

tf.Tensor(424575300000.0, shape=(), dtype=float32)

tf.Tensor(424572620000.0, shape=(), dtype=float32)

tf.Tensor(424569860000.0, shape=(), dtype=float32)

tf.Tensor(424567240000.0, shape=(), dtype=float32)

tf.Tensor(424564520000.0, shape=(), dtype=float32)

tf.Tensor(424561770000.0, shape=(), dtype=float32)

tf.Tensor(424559050000.0, shape=(), dtype=float32)

tf.Tensor(424556430000.0, shape=(), dtype=float32)

tf.Tensor(424553640000.0, shape=(), dtype=float32)

tf.Tensor(424550960000.0, shape=(), dtype=float32)

tf.Tensor(424548200000.0, shape=(), dtype=float32)

tf.Tensor(424545500000.0, shape=(), dtype=float32)

tf.Tensor(424542800000.0, shape=(), dtype=float32)

tf.Tensor(424540000000.0, shape=(), dtype=float32)

tf.Tensor(424537400000.0, shape=(), dtype=float32)

tf.Tensor(424534640000.0, shape=(), dtype=float32)

tf.Tensor(424531900000.0, shape=(), dtype=float32)

tf.Tensor(424529200000.0, shape=(), dtype=float32)

tf.Tensor(424526500000.0, shape=(), dtype=float32)

tf.Tensor(424523830000.0, shape=(), dtype=float32)

tf.Tensor(424521140000.0, shape=(), dtype=float32)

tf.Tensor(424518420000.0, shape=(), dtype=float32)

tf.Tensor(424515630000.0, shape=(), dtype=float32)

tf.Tensor(424512950000.0, shape=(), dtype=float32)

tf.Tensor(424510320000.0, shape=(), dtype=float32)

tf.Tensor(424507640000.0, shape=(), dtype=float32)

tf.Tensor(424504850000.0, shape=(), dtype=float32)

tf.Tensor(424502100000.0, shape=(), dtype=float32)

tf.Tensor(424499380000.0, shape=(), dtype=float32)

tf.Tensor(424496700000.0, shape=(), dtype=float32)

tf.Tensor(424494000000.0, shape=(), dtype=float32)

tf.Tensor(424491300000.0, shape=(), dtype=float32)

tf.Tensor(424488570000.0, shape=(), dtype=float32)

tf.Tensor(424485800000.0, shape=(), dtype=float32)

tf.Tensor(424483100000.0, shape=(), dtype=float32)

tf.Tensor(424480400000.0, shape=(), dtype=float32)

tf.Tensor(424477720000.0, shape=(), dtype=float32)

tf.Tensor(424475000000.0, shape=(), dtype=float32)

tf.Tensor(424472250000.0, shape=(), dtype=float32)

tf.Tensor(424469560000.0, shape=(), dtype=float32)

tf.Tensor(424466870000.0, shape=(), dtype=float32)

tf.Tensor(424464150000.0, shape=(), dtype=float32)

tf.Tensor(424461430000.0, shape=(), dtype=float32)

tf.Tensor(424458680000.0, shape=(), dtype=float32)

tf.Tensor(424456060000.0, shape=(), dtype=float32)

tf.Tensor(424453280000.0, shape=(), dtype=float32)

tf.Tensor(424450560000.0, shape=(), dtype=float32)

tf.Tensor(424447870000.0, shape=(), dtype=float32)

tf.Tensor(424445120000.0, shape=(), dtype=float32)

tf.Tensor(424442560000.0, shape=(), dtype=float32)

tf.Tensor(424439780000.0, shape=(), dtype=float32)

tf.Tensor(424437100000.0, shape=(), dtype=float32)

tf.Tensor(424434340000.0, shape=(), dtype=float32)

tf.Tensor(424431680000.0, shape=(), dtype=float32)

tf.Tensor(424428930000.0, shape=(), dtype=float32)

tf.Tensor(424426240000.0, shape=(), dtype=float32)

tf.Tensor(424423460000.0, shape=(), dtype=float32)

tf.Tensor(424420800000.0, shape=(), dtype=float32)

tf.Tensor(424418020000.0, shape=(), dtype=float32)

tf.Tensor(424415330000.0, shape=(), dtype=float32)

tf.Tensor(424412640000.0, shape=(), dtype=float32)

tf.Tensor(424409960000.0, shape=(), dtype=float32)

tf.Tensor(424407330000.0, shape=(), dtype=float32)

tf.Tensor(424404500000.0, shape=(), dtype=float32)

tf.Tensor(424401800000.0, shape=(), dtype=float32)

tf.Tensor(424399100000.0, shape=(), dtype=float32)

tf.Tensor(424396400000.0, shape=(), dtype=float32)

tf.Tensor(424393700000.0, shape=(), dtype=float32)

tf.Tensor(424391020000.0, shape=(), dtype=float32)

tf.Tensor(424388300000.0, shape=(), dtype=float32)

tf.Tensor(424385500000.0, shape=(), dtype=float32)

tf.Tensor(424382820000.0, shape=(), dtype=float32)

tf.Tensor(424380040000.0, shape=(), dtype=float32)

tf.Tensor(424377350000.0, shape=(), dtype=float32)

tf.Tensor(424374700000.0, shape=(), dtype=float32)

tf.Tensor(424372000000.0, shape=(), dtype=float32)

tf.Tensor(424369230000.0, shape=(), dtype=float32)

tf.Tensor(424366540000.0, shape=(), dtype=float32)

tf.Tensor(424363850000.0, shape=(), dtype=float32)

tf.Tensor(424361130000.0, shape=(), dtype=float32)

tf.Tensor(424358450000.0, shape=(), dtype=float32)

tf.Tensor(424355760000.0, shape=(), dtype=float32)

tf.Tensor(424353070000.0, shape=(), dtype=float32)

tf.Tensor(424350300000.0, shape=(), dtype=float32)

tf.Tensor(424347600000.0, shape=(), dtype=float32)

tf.Tensor(424344880000.0, shape=(), dtype=float32)

tf.Tensor(424342200000.0, shape=(), dtype=float32)

tf.Tensor(424339500000.0, shape=(), dtype=float32)

tf.Tensor(424336820000.0, shape=(), dtype=float32)

tf.Tensor(424334100000.0, shape=(), dtype=float32)

tf.Tensor(424331350000.0, shape=(), dtype=float32)

tf.Tensor(424328630000.0, shape=(), dtype=float32)

tf.Tensor(424325900000.0, shape=(), dtype=float32)

tf.Tensor(424323220000.0, shape=(), dtype=float32)

tf.Tensor(424320530000.0, shape=(), dtype=float32)

tf.Tensor(424317800000.0, shape=(), dtype=float32)

tf.Tensor(424315130000.0, shape=(), dtype=float32)

tf.Tensor(424312370000.0, shape=(), dtype=float32)

tf.Tensor(424309650000.0, shape=(), dtype=float32)

tf.Tensor(424306970000.0, shape=(), dtype=float32)

tf.Tensor(424304250000.0, shape=(), dtype=float32)

tf.Tensor(424301560000.0, shape=(), dtype=float32)

tf.Tensor(424298870000.0, shape=(), dtype=float32)

tf.Tensor(424296200000.0, shape=(), dtype=float32)

tf.Tensor(424293400000.0, shape=(), dtype=float32)

tf.Tensor(424290700000.0, shape=(), dtype=float32)

tf.Tensor(424288030000.0, shape=(), dtype=float32)

tf.Tensor(424285300000.0, shape=(), dtype=float32)

tf.Tensor(424282600000.0, shape=(), dtype=float32)

tf.Tensor(424279900000.0, shape=(), dtype=float32)

tf.Tensor(424277150000.0, shape=(), dtype=float32)

tf.Tensor(424274430000.0, shape=(), dtype=float32)

tf.Tensor(424271740000.0, shape=(), dtype=float32)

tf.Tensor(424269050000.0, shape=(), dtype=float32)

tf.Tensor(424266270000.0, shape=(), dtype=float32)

tf.Tensor(424263650000.0, shape=(), dtype=float32)

tf.Tensor(424260830000.0, shape=(), dtype=float32)

tf.Tensor(424258240000.0, shape=(), dtype=float32)

tf.Tensor(424255460000.0, shape=(), dtype=float32)

tf.Tensor(424252700000.0, shape=(), dtype=float32)

tf.Tensor(424249980000.0, shape=(), dtype=float32)

tf.Tensor(424247300000.0, shape=(), dtype=float32)

tf.Tensor(424244600000.0, shape=(), dtype=float32)

tf.Tensor(424241920000.0, shape=(), dtype=float32)

tf.Tensor(424239200000.0, shape=(), dtype=float32)

tf.Tensor(424236500000.0, shape=(), dtype=float32)

tf.Tensor(424233830000.0, shape=(), dtype=float32)

tf.Tensor(424231100000.0, shape=(), dtype=float32)

tf.Tensor(424228400000.0, shape=(), dtype=float32)

tf.Tensor(424225730000.0, shape=(), dtype=float32)

tf.Tensor(424222950000.0, shape=(), dtype=float32)

tf.Tensor(424220260000.0, shape=(), dtype=float32)

tf.Tensor(424217540000.0, shape=(), dtype=float32)

tf.Tensor(424214860000.0, shape=(), dtype=float32)

tf.Tensor(424212140000.0, shape=(), dtype=float32)

tf.Tensor(424209450000.0, shape=(), dtype=float32)

tf.Tensor(424206760000.0, shape=(), dtype=float32)

tf.Tensor(424204040000.0, shape=(), dtype=float32)

tf.Tensor(424201300000.0, shape=(), dtype=float32)

tf.Tensor(424198500000.0, shape=(), dtype=float32)

tf.Tensor(424195850000.0, shape=(), dtype=float32)

tf.Tensor(424193100000.0, shape=(), dtype=float32)

tf.Tensor(424190480000.0, shape=(), dtype=float32)

tf.Tensor(424187700000.0, shape=(), dtype=float32)

tf.Tensor(424185000000.0, shape=(), dtype=float32)

tf.Tensor(424182320000.0, shape=(), dtype=float32)

tf.Tensor(424179630000.0, shape=(), dtype=float32)

tf.Tensor(424176900000.0, shape=(), dtype=float32)

tf.Tensor(424174220000.0, shape=(), dtype=float32)

tf.Tensor(424171500000.0, shape=(), dtype=float32)

tf.Tensor(424168850000.0, shape=(), dtype=float32)

tf.Tensor(424166130000.0, shape=(), dtype=float32)

tf.Tensor(424163380000.0, shape=(), dtype=float32)

tf.Tensor(424160660000.0, shape=(), dtype=float32)

tf.Tensor(424157970000.0, shape=(), dtype=float32)

tf.Tensor(424155280000.0, shape=(), dtype=float32)

tf.Tensor(424152560000.0, shape=(), dtype=float32)

tf.Tensor(424149840000.0, shape=(), dtype=float32)

tf.Tensor(424147160000.0, shape=(), dtype=float32)

tf.Tensor(424144470000.0, shape=(), dtype=float32)

tf.Tensor(424141700000.0, shape=(), dtype=float32)

tf.Tensor(424139000000.0, shape=(), dtype=float32)

tf.Tensor(424136300000.0, shape=(), dtype=float32)

tf.Tensor(424133530000.0, shape=(), dtype=float32)

tf.Tensor(424130840000.0, shape=(), dtype=float32)

tf.Tensor(424128100000.0, shape=(), dtype=float32)

tf.Tensor(424125430000.0, shape=(), dtype=float32)

tf.Tensor(424122700000.0, shape=(), dtype=float32)

tf.Tensor(424120030000.0, shape=(), dtype=float32)

tf.Tensor(424117300000.0, shape=(), dtype=float32)

tf.Tensor(424114650000.0, shape=(), dtype=float32)

tf.Tensor(424111870000.0, shape=(), dtype=float32)

tf.Tensor(424109180000.0, shape=(), dtype=float32)

tf.Tensor(424106520000.0, shape=(), dtype=float32)

tf.Tensor(424103770000.0, shape=(), dtype=float32)

tf.Tensor(424101050000.0, shape=(), dtype=float32)

tf.Tensor(424098370000.0, shape=(), dtype=float32)

tf.Tensor(424095600000.0, shape=(), dtype=float32)

tf.Tensor(424092900000.0, shape=(), dtype=float32)

tf.Tensor(424090200000.0, shape=(), dtype=float32)

tf.Tensor(424087620000.0, shape=(), dtype=float32)

tf.Tensor(424084830000.0, shape=(), dtype=float32)

tf.Tensor(424082100000.0, shape=(), dtype=float32)

tf.Tensor(424079400000.0, shape=(), dtype=float32)

tf.Tensor(424076640000.0, shape=(), dtype=float32)

tf.Tensor(424073920000.0, shape=(), dtype=float32)

tf.Tensor(424071230000.0, shape=(), dtype=float32)

tf.Tensor(424068550000.0, shape=(), dtype=float32)

tf.Tensor(424065830000.0, shape=(), dtype=float32)

tf.Tensor(424063140000.0, shape=(), dtype=float32)

tf.Tensor(424060420000.0, shape=(), dtype=float32)

tf.Tensor(424057730000.0, shape=(), dtype=float32)

tf.Tensor(424055050000.0, shape=(), dtype=float32)

tf.Tensor(424052300000.0, shape=(), dtype=float32)

tf.Tensor(424049570000.0, shape=(), dtype=float32)

tf.Tensor(424046900000.0, shape=(), dtype=float32)

tf.Tensor(424044200000.0, shape=(), dtype=float32)

tf.Tensor(424041400000.0, shape=(), dtype=float32)

tf.Tensor(424038760000.0, shape=(), dtype=float32)

tf.Tensor(424036070000.0, shape=(), dtype=float32)

tf.Tensor(424033320000.0, shape=(), dtype=float32)

tf.Tensor(424030600000.0, shape=(), dtype=float32)

tf.Tensor(424027900000.0, shape=(), dtype=float32)

tf.Tensor(424025230000.0, shape=(), dtype=float32)

tf.Tensor(424022540000.0, shape=(), dtype=float32)

tf.Tensor(424019850000.0, shape=(), dtype=float32)

tf.Tensor(424017070000.0, shape=(), dtype=float32)

tf.Tensor(424014400000.0, shape=(), dtype=float32)

tf.Tensor(424011730000.0, shape=(), dtype=float32)

tf.Tensor(424008940000.0, shape=(), dtype=float32)

tf.Tensor(424006200000.0, shape=(), dtype=float32)

tf.Tensor(424003530000.0, shape=(), dtype=float32)

tf.Tensor(424000780000.0, shape=(), dtype=float32)

tf.Tensor(423998060000.0, shape=(), dtype=float32)

tf.Tensor(423995400000.0, shape=(), dtype=float32)

tf.Tensor(423992750000.0, shape=(), dtype=float32)

tf.Tensor(423990000000.0, shape=(), dtype=float32)

tf.Tensor(423987380000.0, shape=(), dtype=float32)

tf.Tensor(423984700000.0, shape=(), dtype=float32)

tf.Tensor(423981900000.0, shape=(), dtype=float32)

tf.Tensor(423979220000.0, shape=(), dtype=float32)

tf.Tensor(423976440000.0, shape=(), dtype=float32)

tf.Tensor(423973850000.0, shape=(), dtype=float32)

tf.Tensor(423971100000.0, shape=(), dtype=float32)

tf.Tensor(423968400000.0, shape=(), dtype=float32)

tf.Tensor(423965620000.0, shape=(), dtype=float32)

tf.Tensor(423962940000.0, shape=(), dtype=float32)

tf.Tensor(423960200000.0, shape=(), dtype=float32)

tf.Tensor(423957530000.0, shape=(), dtype=float32)

tf.Tensor(423954740000.0, shape=(), dtype=float32)

tf.Tensor(423952100000.0, shape=(), dtype=float32)

tf.Tensor(423949370000.0, shape=(), dtype=float32)

tf.Tensor(423946680000.0, shape=(), dtype=float32)

tf.Tensor(423943960000.0, shape=(), dtype=float32)

tf.Tensor(423941280000.0, shape=(), dtype=float32)

tf.Tensor(423938600000.0, shape=(), dtype=float32)

tf.Tensor(423935900000.0, shape=(), dtype=float32)

tf.Tensor(423933120000.0, shape=(), dtype=float32)

tf.Tensor(423930430000.0, shape=(), dtype=float32)

tf.Tensor(423927780000.0, shape=(), dtype=float32)

tf.Tensor(423925000000.0, shape=(), dtype=float32)

tf.Tensor(423922400000.0, shape=(), dtype=float32)

tf.Tensor(423919600000.0, shape=(), dtype=float32)

tf.Tensor(423916930000.0, shape=(), dtype=float32)

tf.Tensor(423914240000.0, shape=(), dtype=float32)

tf.Tensor(423911460000.0, shape=(), dtype=float32)

tf.Tensor(423908770000.0, shape=(), dtype=float32)

tf.Tensor(423906080000.0, shape=(), dtype=float32)

tf.Tensor(423903430000.0, shape=(), dtype=float32)

tf.Tensor(423900640000.0, shape=(), dtype=float32)

tf.Tensor(423897960000.0, shape=(), dtype=float32)

tf.Tensor(423895200000.0, shape=(), dtype=float32)

tf.Tensor(423892550000.0, shape=(), dtype=float32)

tf.Tensor(423889860000.0, shape=(), dtype=float32)

tf.Tensor(423887100000.0, shape=(), dtype=float32)

tf.Tensor(423884420000.0, shape=(), dtype=float32)

tf.Tensor(423881670000.0, shape=(), dtype=float32)

tf.Tensor(423879000000.0, shape=(), dtype=float32)

tf.Tensor(423876300000.0, shape=(), dtype=float32)

tf.Tensor(423873600000.0, shape=(), dtype=float32)

tf.Tensor(423870900000.0, shape=(), dtype=float32)

tf.Tensor(423868200000.0, shape=(), dtype=float32)

tf.Tensor(423865450000.0, shape=(), dtype=float32)

tf.Tensor(423862760000.0, shape=(), dtype=float32)

tf.Tensor(423860000000.0, shape=(), dtype=float32)

tf.Tensor(423857320000.0, shape=(), dtype=float32)

tf.Tensor(423854640000.0, shape=(), dtype=float32)

tf.Tensor(423851950000.0, shape=(), dtype=float32)

tf.Tensor(423849230000.0, shape=(), dtype=float32)

tf.Tensor(423846480000.0, shape=(), dtype=float32)

tf.Tensor(423843800000.0, shape=(), dtype=float32)

tf.Tensor(423841100000.0, shape=(), dtype=float32)

tf.Tensor(423838320000.0, shape=(), dtype=float32)

tf.Tensor(423835660000.0, shape=(), dtype=float32)

tf.Tensor(423832980000.0, shape=(), dtype=float32)

tf.Tensor(423830300000.0, shape=(), dtype=float32)

tf.Tensor(423827540000.0, shape=(), dtype=float32)

tf.Tensor(423824820000.0, shape=(), dtype=float32)

tf.Tensor(423822130000.0, shape=(), dtype=float32)

tf.Tensor(423819400000.0, shape=(), dtype=float32)

tf.Tensor(423816720000.0, shape=(), dtype=float32)

tf.Tensor(423814000000.0, shape=(), dtype=float32)

tf.Tensor(423811320000.0, shape=(), dtype=float32)

tf.Tensor(423808630000.0, shape=(), dtype=float32)

tf.Tensor(423805880000.0, shape=(), dtype=float32)

tf.Tensor(423803220000.0, shape=(), dtype=float32)

tf.Tensor(423800470000.0, shape=(), dtype=float32)

tf.Tensor(423797800000.0, shape=(), dtype=float32)

tf.Tensor(423795100000.0, shape=(), dtype=float32)

tf.Tensor(423792350000.0, shape=(), dtype=float32)

tf.Tensor(423789660000.0, shape=(), dtype=float32)

tf.Tensor(423786970000.0, shape=(), dtype=float32)

tf.Tensor(423784220000.0, shape=(), dtype=float32)

tf.Tensor(423781600000.0, shape=(), dtype=float32)

tf.Tensor(423778800000.0, shape=(), dtype=float32)

tf.Tensor(423776120000.0, shape=(), dtype=float32)

tf.Tensor(423773440000.0, shape=(), dtype=float32)

tf.Tensor(423770720000.0, shape=(), dtype=float32)

tf.Tensor(423768100000.0, shape=(), dtype=float32)

tf.Tensor(423765200000.0, shape=(), dtype=float32)

tf.Tensor(423762560000.0, shape=(), dtype=float32)

tf.Tensor(423759840000.0, shape=(), dtype=float32)

tf.Tensor(423757220000.0, shape=(), dtype=float32)

tf.Tensor(423754470000.0, shape=(), dtype=float32)

tf.Tensor(423751780000.0, shape=(), dtype=float32)

tf.Tensor(423749060000.0, shape=(), dtype=float32)

tf.Tensor(423746370000.0, shape=(), dtype=float32)

tf.Tensor(423743550000.0, shape=(), dtype=float32)

tf.Tensor(423740900000.0, shape=(), dtype=float32)

tf.Tensor(423738180000.0, shape=(), dtype=float32)

tf.Tensor(423735500000.0, shape=(), dtype=float32)

tf.Tensor(423732870000.0, shape=(), dtype=float32)

tf.Tensor(423730120000.0, shape=(), dtype=float32)

tf.Tensor(423727330000.0, shape=(), dtype=float32)

tf.Tensor(423724680000.0, shape=(), dtype=float32)

tf.Tensor(423722000000.0, shape=(), dtype=float32)

tf.Tensor(423719300000.0, shape=(), dtype=float32)

tf.Tensor(423716620000.0, shape=(), dtype=float32)

tf.Tensor(423713830000.0, shape=(), dtype=float32)

tf.Tensor(423711150000.0, shape=(), dtype=float32)

tf.Tensor(423708430000.0, shape=(), dtype=float32)

tf.Tensor(423705670000.0, shape=(), dtype=float32)

tf.Tensor(423703020000.0, shape=(), dtype=float32)

tf.Tensor(423700330000.0, shape=(), dtype=float32)

tf.Tensor(423697550000.0, shape=(), dtype=float32)

tf.Tensor(423694860000.0, shape=(), dtype=float32)

tf.Tensor(423692170000.0, shape=(), dtype=float32)

tf.Tensor(423689500000.0, shape=(), dtype=float32)

tf.Tensor(423686770000.0, shape=(), dtype=float32)

tf.Tensor(423684100000.0, shape=(), dtype=float32)

tf.Tensor(423681400000.0, shape=(), dtype=float32)

tf.Tensor(423678770000.0, shape=(), dtype=float32)

tf.Tensor(423676000000.0, shape=(), dtype=float32)

tf.Tensor(423673300000.0, shape=(), dtype=float32)

tf.Tensor(423670500000.0, shape=(), dtype=float32)

tf.Tensor(423667830000.0, shape=(), dtype=float32)

tf.Tensor(423665100000.0, shape=(), dtype=float32)

tf.Tensor(423662420000.0, shape=(), dtype=float32)

tf.Tensor(423659670000.0, shape=(), dtype=float32)

tf.Tensor(423657000000.0, shape=(), dtype=float32)

tf.Tensor(423654330000.0, shape=(), dtype=float32)

tf.Tensor(423651570000.0, shape=(), dtype=float32)

tf.Tensor(423648950000.0, shape=(), dtype=float32)

tf.Tensor(423646230000.0, shape=(), dtype=float32)

tf.Tensor(423643480000.0, shape=(), dtype=float32)

tf.Tensor(423640800000.0, shape=(), dtype=float32)

tf.Tensor(423638070000.0, shape=(), dtype=float32)

tf.Tensor(423635350000.0, shape=(), dtype=float32)

tf.Tensor(423632670000.0, shape=(), dtype=float32)

tf.Tensor(423629880000.0, shape=(), dtype=float32)

tf.Tensor(423627230000.0, shape=(), dtype=float32)

tf.Tensor(423624600000.0, shape=(), dtype=float32)

tf.Tensor(423621820000.0, shape=(), dtype=float32)

tf.Tensor(423619130000.0, shape=(), dtype=float32)

tf.Tensor(423616450000.0, shape=(), dtype=float32)

tf.Tensor(423613800000.0, shape=(), dtype=float32)

tf.Tensor(423611000000.0, shape=(), dtype=float32)

tf.Tensor(423608250000.0, shape=(), dtype=float32)

tf.Tensor(423605630000.0, shape=(), dtype=float32)

tf.Tensor(423602850000.0, shape=(), dtype=float32)

tf.Tensor(423600230000.0, shape=(), dtype=float32)

tf.Tensor(423597540000.0, shape=(), dtype=float32)

tf.Tensor(423594800000.0, shape=(), dtype=float32)

tf.Tensor(423592070000.0, shape=(), dtype=float32)

tf.Tensor(423589350000.0, shape=(), dtype=float32)

tf.Tensor(423586660000.0, shape=(), dtype=float32)

tf.Tensor(423583970000.0, shape=(), dtype=float32)

tf.Tensor(423581300000.0, shape=(), dtype=float32)

tf.Tensor(423578570000.0, shape=(), dtype=float32)

tf.Tensor(423575800000.0, shape=(), dtype=float32)

tf.Tensor(423573130000.0, shape=(), dtype=float32)

tf.Tensor(423570400000.0, shape=(), dtype=float32)

tf.Tensor(423567700000.0, shape=(), dtype=float32)

tf.Tensor(423565000000.0, shape=(), dtype=float32)

tf.Tensor(423562250000.0, shape=(), dtype=float32)

tf.Tensor(423559600000.0, shape=(), dtype=float32)

tf.Tensor(423556840000.0, shape=(), dtype=float32)

tf.Tensor(423554150000.0, shape=(), dtype=float32)

tf.Tensor(423551470000.0, shape=(), dtype=float32)

tf.Tensor(423548750000.0, shape=(), dtype=float32)

tf.Tensor(423546130000.0, shape=(), dtype=float32)

tf.Tensor(423543370000.0, shape=(), dtype=float32)

tf.Tensor(423540700000.0, shape=(), dtype=float32)

tf.Tensor(423537970000.0, shape=(), dtype=float32)

tf.Tensor(423535280000.0, shape=(), dtype=float32)

tf.Tensor(423532560000.0, shape=(), dtype=float32)

tf.Tensor(423529800000.0, shape=(), dtype=float32)

tf.Tensor(423527120000.0, shape=(), dtype=float32)

tf.Tensor(423524470000.0, shape=(), dtype=float32)

tf.Tensor(423521780000.0, shape=(), dtype=float32)

tf.Tensor(423519000000.0, shape=(), dtype=float32)

tf.Tensor(423516300000.0, shape=(), dtype=float32)

tf.Tensor(423513600000.0, shape=(), dtype=float32)

tf.Tensor(423510840000.0, shape=(), dtype=float32)

tf.Tensor(423508200000.0, shape=(), dtype=float32)

tf.Tensor(423505460000.0, shape=(), dtype=float32)

tf.Tensor(423502800000.0, shape=(), dtype=float32)

tf.Tensor(423500050000.0, shape=(), dtype=float32)

tf.Tensor(423497370000.0, shape=(), dtype=float32)

tf.Tensor(423494650000.0, shape=(), dtype=float32)

tf.Tensor(423491930000.0, shape=(), dtype=float32)

tf.Tensor(423489240000.0, shape=(), dtype=float32)

tf.Tensor(423486550000.0, shape=(), dtype=float32)

1.0991763 1.0991884

3.6 Multiple regression
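For comparison with the setup in this section, here is a minimal sketch of multiple regression fitted by plain gradient descent on a mean-squared-error loss. It uses dependency-free Python rather than TensorFlow. The synthetic data, the generating coefficients (1, 2, -3), the learning rate, and the helper names `mse` and `gradients` are all assumptions made for illustration; none of them come from these notes or from the dataset used in them.

```python
# Sketch: gradient descent for y ~ b0 + b1*x1 + b2*x2 with an MSE loss.
# All data below is synthetic (hypothetical), not the dataset from the notes.
import random

random.seed(0)

# Two features on a comparable scale and a noise-free target built from
# known coefficients, so the result can be checked against them.
n = 200
x1 = [random.uniform(-1.0, 1.0) for _ in range(n)]
x2 = [random.uniform(-1.0, 1.0) for _ in range(n)]
y = [1.0 + 2.0 * a - 3.0 * b for a, b in zip(x1, x2)]


def mse(b0, b1, b2):
    """Mean squared error of the linear model on the synthetic data."""
    return sum((b0 + b1 * a + b2 * b - t) ** 2
               for a, b, t in zip(x1, x2, y)) / n


def gradients(b0, b1, b2):
    """Analytic gradient of the MSE with respect to (b0, b1, b2)."""
    g0 = g1 = g2 = 0.0
    for a, b, t in zip(x1, x2, y):
        err = b0 + b1 * a + b2 * b - t
        g0 += 2.0 * err / n
        g1 += 2.0 * err * a / n
        g2 += 2.0 * err * b / n
    return g0, g1, g2


# Start from zero and take 1000 fixed-size gradient steps.
b0 = b1 = b2 = 0.0
lr = 0.1  # assumed learning rate
initial_loss = mse(b0, b1, b2)
for step in range(1000):
    g0, g1, g2 = gradients(b0, b1, b2)
    b0, b1, b2 = b0 - lr * g0, b1 - lr * g1, b2 - lr * g2
final_loss = mse(b0, b1, b2)

print(initial_loss, final_loss)
print(b0, b1, b2)
```

Because both synthetic features lie in [-1, 1], a fixed step of 0.1 is enough for the estimates to approach the generating coefficients. With features on very different scales, a smaller step or rescaled inputs would likely be needed.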

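def loss_function2(beta0, beta1, beta2):
    # Linear model with two features: size and size2
    yhat = beta0 + beta1*size + beta2*size2
    # Mean squared error between observed and predicted price
    loss = tf.keras.losses.mse(price, yhat)
    return loss

for i in range(1000):
    # One optimizer step per iteration, then print the current loss
    opt.minimize(lambda: loss_function2(beta0, beta1, beta2), var_list=[beta0, beta1, beta2])
    print(loss_function2(beta0, beta1, beta2))

# Fitted coefficients after training
print(beta0.numpy(), beta1.numpy(), beta2.numpy())

tf.Tensor(424295700000.0, shape=(), dtype=float32)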

tf.Tensor(424276920000.0, shape=(), dtype=float32)

tf.Tensor(424257220000.0, shape=(), dtype=float32)

tf.Tensor(424237070000.0, shape=(), dtype=float32)

tf.Tensor(424216560000.0, shape=(), dtype=float32)

tf.Tensor(424195850000.0, shape=(), dtype=float32)

tf.Tensor(424174780000.0, shape=(), dtype=float32)

tf.Tensor(424153740000.0, shape=(), dtype=float32)

tf.Tensor(424132540000.0, shape=(), dtype=float32)

tf.Tensor(424111370000.0, shape=(), dtype=float32)

tf.Tensor(424090100000.0, shape=(), dtype=float32)

tf.Tensor(424068740000.0, shape=(), dtype=float32)

tf.Tensor(424047380000.0, shape=(), dtype=float32)

tf.Tensor(424025900000.0, shape=(), dtype=float32)

tf.Tensor(424004520000.0, shape=(), dtype=float32)

tf.Tensor(423983150000.0, shape=(), dtype=float32)

tf.Tensor(423961760000.0, shape=(), dtype=float32)

tf.Tensor(423940300000.0, shape=(), dtype=float32)

tf.Tensor(423918730000.0, shape=(), dtype=float32)

tf.Tensor(423897300000.0, shape=(), dtype=float32)

tf.Tensor(423875900000.0, shape=(), dtype=float32)

tf.Tensor(423854340000.0, shape=(), dtype=float32)

tf.Tensor(423832900000.0, shape=(), dtype=float32)

tf.Tensor(423811400000.0, shape=(), dtype=float32)

tf.Tensor(423790050000.0, shape=(), dtype=float32)

tf.Tensor(423768520000.0, shape=(), dtype=float32)

tf.Tensor(423747060000.0, shape=(), dtype=float32)

tf.Tensor(423725560000.0, shape=(), dtype=float32)

tf.Tensor(423704200000.0, shape=(), dtype=float32)

tf.Tensor(423682740000.0, shape=(), dtype=float32)

tf.Tensor(423661340000.0, shape=(), dtype=float32)

tf.Tensor(423639880000.0, shape=(), dtype=float32)

tf.Tensor(423618540000.0, shape=(), dtype=float32)

tf.Tensor(423596980000.0, shape=(), dtype=float32)

tf.Tensor(423575620000.0, shape=(), dtype=float32)

tf.Tensor(423554150000.0, shape=(), dtype=float32)

tf.Tensor(423532900000.0, shape=(), dtype=float32)

tf.Tensor(423511520000.0, shape=(), dtype=float32)

tf.Tensor(423490220000.0, shape=(), dtype=float32)

tf.Tensor(423468830000.0, shape=(), dtype=float32)

tf.Tensor(423447460000.0, shape=(), dtype=float32)

tf.Tensor(423426130000.0, shape=(), dtype=float32)

tf.Tensor(423404830000.0, shape=(), dtype=float32)

tf.Tensor(423383500000.0, shape=(), dtype=float32)

tf.Tensor(423362200000.0, shape=(), dtype=float32)

tf.Tensor(423340830000.0, shape=(), dtype=float32)

tf.Tensor(423319670000.0, shape=(), dtype=float32)

tf.Tensor(423298270000.0, shape=(), dtype=float32)

tf.Tensor(423277040000.0, shape=(), dtype=float32)

tf.Tensor(423255740000.0, shape=(), dtype=float32)

tf.Tensor(423234500000.0, shape=(), dtype=float32)

tf.Tensor(423213270000.0, shape=(), dtype=float32)

tf.Tensor(423192000000.0, shape=(), dtype=float32)

tf.Tensor(423170740000.0, shape=(), dtype=float32)

tf.Tensor(423149570000.0, shape=(), dtype=float32)

tf.Tensor(423128300000.0, shape=(), dtype=float32)

tf.Tensor(423107130000.0, shape=(), dtype=float32)

tf.Tensor(423085870000.0, shape=(), dtype=float32)

tf.Tensor(423064670000.0, shape=(), dtype=float32)

tf.Tensor(423043560000.0, shape=(), dtype=float32)

tf.Tensor(423022400000.0, shape=(), dtype=float32)

tf.Tensor(423001230000.0, shape=(), dtype=float32)

tf.Tensor(422980000000.0, shape=(), dtype=float32)

tf.Tensor(422958900000.0, shape=(), dtype=float32)

tf.Tensor(422937720000.0, shape=(), dtype=float32)

tf.Tensor(422916620000.0, shape=(), dtype=float32)

tf.Tensor(422895500000.0, shape=(), dtype=float32)

tf.Tensor(422874350000.0, shape=(), dtype=float32)

tf.Tensor(422853280000.0, shape=(), dtype=float32)

tf.Tensor(422832100000.0, shape=(), dtype=float32)

tf.Tensor(422811040000.0, shape=(), dtype=float32)

tf.Tensor(422789970000.0, shape=(), dtype=float32)

tf.Tensor(422768870000.0, shape=(), dtype=float32)

tf.Tensor(422747800000.0, shape=(), dtype=float32)

tf.Tensor(422726730000.0, shape=(), dtype=float32)

tf.Tensor(422705730000.0, shape=(), dtype=float32)

tf.Tensor(422684660000.0, shape=(), dtype=float32)

tf.Tensor(422663650000.0, shape=(), dtype=float32)

tf.Tensor(422642500000.0, shape=(), dtype=float32)

tf.Tensor(422621580000.0, shape=(), dtype=float32)

tf.Tensor(422600540000.0, shape=(), dtype=float32)

tf.Tensor(422579570000.0, shape=(), dtype=float32)

tf.Tensor(422558530000.0, shape=(), dtype=float32)

tf.Tensor(422537500000.0, shape=(), dtype=float32)

tf.Tensor(422516500000.0, shape=(), dtype=float32)

tf.Tensor(422495600000.0, shape=(), dtype=float32)

tf.Tensor(422474500000.0, shape=(), dtype=float32)

tf.Tensor(422453600000.0, shape=(), dtype=float32)

tf.Tensor(422432570000.0, shape=(), dtype=float32)

tf.Tensor(422411600000.0, shape=(), dtype=float32)

tf.Tensor(422390760000.0, shape=(), dtype=float32)

tf.Tensor(422369800000.0, shape=(), dtype=float32)

tf.Tensor(422348880000.0, shape=(), dtype=float32)

tf.Tensor(422327900000.0, shape=(), dtype=float32)

tf.Tensor(422306970000.0, shape=(), dtype=float32)

tf.Tensor(422286130000.0, shape=(), dtype=float32)

tf.Tensor(422265200000.0, shape=(), dtype=float32)

tf.Tensor(422244300000.0, shape=(), dtype=float32)

tf.Tensor(422223300000.0, shape=(), dtype=float32)

tf.Tensor(422202500000.0, shape=(), dtype=float32)

tf.Tensor(422181700000.0, shape=(), dtype=float32)

tf.Tensor(422160730000.0, shape=(), dtype=float32)

tf.Tensor(422139920000.0, shape=(), dtype=float32)

tf.Tensor(422118980000.0, shape=(), dtype=float32)

tf.Tensor(422098170000.0, shape=(), dtype=float32)

tf.Tensor(422077270000.0, shape=(), dtype=float32)

tf.Tensor(422056460000.0, shape=(), dtype=float32)

tf.Tensor(422035700000.0, shape=(), dtype=float32)

tf.Tensor(422014780000.0, shape=(), dtype=float32)

tf.Tensor(421994040000.0, shape=(), dtype=float32)

tf.Tensor(421973200000.0, shape=(), dtype=float32)

tf.Tensor(421952420000.0, shape=(), dtype=float32)

tf.Tensor(421931600000.0, shape=(), dtype=float32)

tf.Tensor(421910840000.0, shape=(), dtype=float32)

tf.Tensor(421890030000.0, shape=(), dtype=float32)

tf.Tensor(421869260000.0, shape=(), dtype=float32)

tf.Tensor(421848480000.0, shape=(), dtype=float32)

tf.Tensor(421827770000.0, shape=(), dtype=float32)

tf.Tensor(421807000000.0, shape=(), dtype=float32)

tf.Tensor(421786120000.0, shape=(), dtype=float32)

tf.Tensor(421765480000.0, shape=(), dtype=float32)

tf.Tensor(421744740000.0, shape=(), dtype=float32)

tf.Tensor(421724030000.0, shape=(), dtype=float32)

tf.Tensor(421703300000.0, shape=(), dtype=float32)

tf.Tensor(421682500000.0, shape=(), dtype=float32)

tf.Tensor(421661840000.0, shape=(), dtype=float32)

tf.Tensor(421641130000.0, shape=(), dtype=float32)

tf.Tensor(421620450000.0, shape=(), dtype=float32)

tf.Tensor(421599770000.0, shape=(), dtype=float32)

tf.Tensor(421579060000.0, shape=(), dtype=float32)

tf.Tensor(421558400000.0, shape=(), dtype=float32)

tf.Tensor(421537740000.0, shape=(), dtype=float32)

tf.Tensor(421517070000.0, shape=(), dtype=float32)

tf.Tensor(421496400000.0, shape=(), dtype=float32)

tf.Tensor(421475750000.0, shape=(), dtype=float32)

tf.Tensor(421455070000.0, shape=(), dtype=float32)

tf.Tensor(421434500000.0, shape=(), dtype=float32)

tf.Tensor(421413850000.0, shape=(), dtype=float32)

tf.Tensor(421393200000.0, shape=(), dtype=float32)

tf.Tensor(421372500000.0, shape=(), dtype=float32)

tf.Tensor(421351900000.0, shape=(), dtype=float32)

tf.Tensor(421331270000.0, shape=(), dtype=float32)

tf.Tensor(421310700000.0, shape=(), dtype=float32)

tf.Tensor(421290100000.0, shape=(), dtype=float32)

tf.Tensor(421269570000.0, shape=(), dtype=float32)

tf.Tensor(421248900000.0, shape=(), dtype=float32)

tf.Tensor(421228300000.0, shape=(), dtype=float32)

tf.Tensor(421207770000.0, shape=(), dtype=float32)

tf.Tensor(421187300000.0, shape=(), dtype=float32)

tf.Tensor(421166600000.0, shape=(), dtype=float32)

tf.Tensor(421146170000.0, shape=(), dtype=float32)

tf.Tensor(421125520000.0, shape=(), dtype=float32)

tf.Tensor(421105000000.0, shape=(), dtype=float32)

tf.Tensor(421084560000.0, shape=(), dtype=float32)

tf.Tensor(421064050000.0, shape=(), dtype=float32)

tf.Tensor(421043500000.0, shape=(), dtype=float32)

tf.Tensor(421022900000.0, shape=(), dtype=float32)

tf.Tensor(421002440000.0, shape=(), dtype=float32)

tf.Tensor(420981960000.0, shape=(), dtype=float32)

tf.Tensor(420961450000.0, shape=(), dtype=float32)

tf.Tensor(420940840000.0, shape=(), dtype=float32)

tf.Tensor(420920460000.0, shape=(), dtype=float32)

tf.Tensor(420899980000.0, shape=(), dtype=float32)

tf.Tensor(420879530000.0, shape=(), dtype=float32)

tf.Tensor(420859120000.0, shape=(), dtype=float32)

tf.Tensor(420838540000.0, shape=(), dtype=float32)

tf.Tensor(420818120000.0, shape=(), dtype=float32)

tf.Tensor(420797700000.0, shape=(), dtype=float32)

tf.Tensor(420777260000.0, shape=(), dtype=float32)

tf.Tensor(420756750000.0, shape=(), dtype=float32)

tf.Tensor(420736400000.0, shape=(), dtype=float32)

tf.Tensor(420716020000.0, shape=(), dtype=float32)

tf.Tensor(420695540000.0, shape=(), dtype=float32)

tf.Tensor(420675100000.0, shape=(), dtype=float32)

tf.Tensor(420654700000.0, shape=(), dtype=float32)

tf.Tensor(420634300000.0, shape=(), dtype=float32)

tf.Tensor(420613880000.0, shape=(), dtype=float32)

tf.Tensor(420593500000.0, shape=(), dtype=float32)

tf.Tensor(420573150000.0, shape=(), dtype=float32)

tf.Tensor(420552770000.0, shape=(), dtype=float32)

tf.Tensor(420532360000.0, shape=(), dtype=float32)

tf.Tensor(420512070000.0, shape=(), dtype=float32)

tf.Tensor(420491720000.0, shape=(), dtype=float32)

tf.Tensor(420471400000.0, shape=(), dtype=float32)

tf.Tensor(420451020000.0, shape=(), dtype=float32)

tf.Tensor(420430640000.0, shape=(), dtype=float32)

tf.Tensor(420410330000.0, shape=(), dtype=float32)

tf.Tensor(420390000000.0, shape=(), dtype=float32)

tf.Tensor(420369700000.0, shape=(), dtype=float32)

tf.Tensor(420349350000.0, shape=(), dtype=float32)

tf.Tensor(420329060000.0, shape=(), dtype=float32)

tf.Tensor(420308750000.0, shape=(), dtype=float32)

tf.Tensor(420288460000.0, shape=(), dtype=float32)

tf.Tensor(420268200000.0, shape=(), dtype=float32)

tf.Tensor(420247900000.0, shape=(), dtype=float32)

tf.Tensor(420227600000.0, shape=(), dtype=float32)

tf.Tensor(420207400000.0, shape=(), dtype=float32)

tf.Tensor(420187080000.0, shape=(), dtype=float32)

tf.Tensor(420166760000.0, shape=(), dtype=float32)

tf.Tensor(420146540000.0, shape=(), dtype=float32)

tf.Tensor(420126330000.0, shape=(), dtype=float32)

tf.Tensor(420106100000.0, shape=(), dtype=float32)

tf.Tensor(420085830000.0, shape=(), dtype=float32)

tf.Tensor(420065600000.0, shape=(), dtype=float32)

tf.Tensor(420045400000.0, shape=(), dtype=float32)

tf.Tensor(420025170000.0, shape=(), dtype=float32)

tf.Tensor(420004900000.0, shape=(), dtype=float32)

tf.Tensor(419984830000.0, shape=(), dtype=float32)

tf.Tensor(419964550000.0, shape=(), dtype=float32)

tf.Tensor(419944330000.0, shape=(), dtype=float32)

tf.Tensor(419924200000.0, shape=(), dtype=float32)

tf.Tensor(419904000000.0, shape=(), dtype=float32)

tf.Tensor(419883780000.0, shape=(), dtype=float32)

tf.Tensor(419863600000.0, shape=(), dtype=float32)

tf.Tensor(419843540000.0, shape=(), dtype=float32)

tf.Tensor(419823350000.0, shape=(), dtype=float32)

tf.Tensor(419803140000.0, shape=(), dtype=float32)

tf.Tensor(419783020000.0, shape=(), dtype=float32)

tf.Tensor(419762960000.0, shape=(), dtype=float32)

tf.Tensor(419742740000.0, shape=(), dtype=float32)

tf.Tensor(419722720000.0, shape=(), dtype=float32)

tf.Tensor(419702440000.0, shape=(), dtype=float32)

tf.Tensor(419682400000.0, shape=(), dtype=float32)

tf.Tensor(419662300000.0, shape=(), dtype=float32)

tf.Tensor(419642150000.0, shape=(), dtype=float32)

tf.Tensor(419622060000.0, shape=(), dtype=float32)

tf.Tensor(419602100000.0, shape=(), dtype=float32)

tf.Tensor(419581920000.0, shape=(), dtype=float32)

tf.Tensor(419561800000.0, shape=(), dtype=float32)

tf.Tensor(419541780000.0, shape=(), dtype=float32)

tf.Tensor(419521700000.0, shape=(), dtype=float32)

tf.Tensor(419501570000.0, shape=(), dtype=float32)

tf.Tensor(419481550000.0, shape=(), dtype=float32)

tf.Tensor(419461500000.0, shape=(), dtype=float32)

tf.Tensor(419441400000.0, shape=(), dtype=float32)

tf.Tensor(419421360000.0, shape=(), dtype=float32)

tf.Tensor(419401370000.0, shape=(), dtype=float32)

tf.Tensor(419381350000.0, shape=(), dtype=float32)

tf.Tensor(419361330000.0, shape=(), dtype=float32)

tf.Tensor(419341270000.0, shape=(), dtype=float32)

tf.Tensor(419321250000.0, shape=(), dtype=float32)

tf.Tensor(419301260000.0, shape=(), dtype=float32)

tf.Tensor(419281300000.0, shape=(), dtype=float32)

tf.Tensor(419261380000.0, shape=(), dtype=float32)

tf.Tensor(419241300000.0, shape=(), dtype=float32)

tf.Tensor(419221440000.0, shape=(), dtype=float32)

tf.Tensor(419201350000.0, shape=(), dtype=float32)

tf.Tensor(419181460000.0, shape=(), dtype=float32)

tf.Tensor(419161440000.0, shape=(), dtype=float32)

tf.Tensor(419141450000.0, shape=(), dtype=float32)

tf.Tensor(419121600000.0, shape=(), dtype=float32)

tf.Tensor(419101600000.0, shape=(), dtype=float32)

tf.Tensor(419081600000.0, shape=(), dtype=float32)

tf.Tensor(419061660000.0, shape=(), dtype=float32)

tf.Tensor(419041800000.0, shape=(), dtype=float32)

tf.Tensor(419021800000.0, shape=(), dtype=float32)

tf.Tensor(419002000000.0, shape=(), dtype=float32)

tf.Tensor(418981970000.0, shape=(), dtype=float32)

tf.Tensor(418962100000.0, shape=(), dtype=float32)

tf.Tensor(418942220000.0, shape=(), dtype=float32)

tf.Tensor(418922270000.0, shape=(), dtype=float32)

tf.Tensor(418902470000.0, shape=(), dtype=float32)

tf.Tensor(418882600000.0, shape=(), dtype=float32)

tf.Tensor(418862700000.0, shape=(), dtype=float32)

tf.Tensor(418842770000.0, shape=(), dtype=float32)

tf.Tensor(418822950000.0, shape=(), dtype=float32)

tf.Tensor(418803120000.0, shape=(), dtype=float32)

tf.Tensor(418783300000.0, shape=(), dtype=float32)

tf.Tensor(418763370000.0, shape=(), dtype=float32)

tf.Tensor(418743520000.0, shape=(), dtype=float32)

tf.Tensor(418723630000.0, shape=(), dtype=float32)

tf.Tensor(418703870000.0, shape=(), dtype=float32)

tf.Tensor(418684100000.0, shape=(), dtype=float32)

tf.Tensor(418664220000.0, shape=(), dtype=float32)

tf.Tensor(418644460000.0, shape=(), dtype=float32)

tf.Tensor(418624570000.0, shape=(), dtype=float32)

tf.Tensor(418604800000.0, shape=(), dtype=float32)

tf.Tensor(418585020000.0, shape=(), dtype=float32)

tf.Tensor(418565260000.0, shape=(), dtype=float32)

tf.Tensor(418545430000.0, shape=(), dtype=float32)

tf.Tensor(418525700000.0, shape=(), dtype=float32)

tf.Tensor(418505900000.0, shape=(), dtype=float32)

tf.Tensor(418486160000.0, shape=(), dtype=float32)

tf.Tensor(418466330000.0, shape=(), dtype=float32)

tf.Tensor(418446640000.0, shape=(), dtype=float32)

tf.Tensor(418426850000.0, shape=(), dtype=float32)

tf.Tensor(418407200000.0, shape=(), dtype=float32)

tf.Tensor(418387360000.0, shape=(), dtype=float32)